Retrieval-Augmented Generation connects LLMs to your live, proprietary data — delivering accurate, current, and trustworthy AI that transforms how your business operates.

Standard Large Language Models are powerful, but enterprise deployment reveals critical pitfalls that RAG is designed to solve.

LLMs can invent facts, leading to flawed business decisions, damaged reputations, and a fundamental lack of trust in your AI systems.

Trained on static data with a knowledge cut-off date, LLMs can't provide reliable advice on recent events, regulations, or market shifts.

Standard models are unaware of your internal data, processes, and customer histories, resulting in generic, unhelpful, and impersonal responses.

Keeping a traditional LLM up-to-date requires frequent, resource-intensive, and expensive re-training cycles to absorb new information.

RAG is an advanced AI framework that enhances LLMs by connecting them to external, verifiable knowledge sources in real-time. Instead of relying on static, pre-trained knowledge, RAG retrieves relevant, up-to-date information to construct a factually grounded and contextually aware response.

Accesses live data to ensure responses are always current.

Uses your proprietary data to provide business-specific answers.

Grounds answers in retrieved facts, reducing hallucinations.

"Our refund policy typically allows returns within 30 days. Enterprise clients may have different terms depending on their agreement. Generally, a 15% restocking fee applies. Please contact support for specific details."

"Enterprise clients on annual contracts receive a pro-rated refund for unused months with no restocking fee. Refund requests must be submitted within 14 days of the billing cycle end."

See how Retrieval-Augmented Generation transforms knowledge access across sectors, delivering accurate, cited answers from your proprietary data.

RAG enables healthcare providers to instantly retrieve relevant clinical guidelines, patient histories, and research findings. Medical professionals can ask natural language questions and receive accurate, cited answers from your organisation's knowledge base.

For patients with eGFR 30-45: reduce dose to 500mg twice daily. For eGFR <30: contraindicated.

Transform how your teams handle policy questions and claims processing. RAG connects underwriters and claims handlers to the exact policy clauses, precedents, and guidelines they need, reducing resolution time and improving accuracy.

Yes, sudden and accidental water damage from burst pipes is covered under Section A - Dwelling Coverage.

Legal professionals can query vast repositories of case law, contracts, and regulatory documents using natural language. RAG delivers precise, cited answers that save hours of manual research while maintaining the accuracy the profession demands.

The standard limitation period is 6 years from the date of breach under s.5 Limitation Act 1980.

Educational institutions can deploy RAG-powered assistants that answer student queries from curriculum materials, research papers, and institutional knowledge. Support staff can access policies and procedures instantly.

Key themes: Revenge and justice, Appearance vs reality, Mortality and the afterlife, Corruption and decay.

RAG is a rapidly advancing frontier. We stay at the forefront of these trends to build solutions that are not just current, but future-proof.

This paradigm moves RAG from a passive fetch-and-answer tool to a proactive problem-solver. An AI 'agent' can autonomously break down a complex query into multiple steps, decide which data sources to query (e.g., a document base, then a live API), and synthesise the findings into a comprehensive, multi-faceted answer.

Instead of just searching for text, GraphRAG leverages knowledge graphs to understand the relationships *between* data points. This allows for far more nuanced and precise retrieval, answering complex questions like 'Which of our projects used the same supplier as the project led by John Doe?' with exceptional accuracy.

The future is not just text. Multi-Modal RAG expands retrieval capabilities to include images, audio clips, and video content. An LLM could 'watch' a product demo video or 'look' at a technical diagram to answer a user's question, opening up a vast new landscape of enterprise knowledge.

Building enterprise-grade RAG requires more than off-the-shelf tools. It demands a deep understanding of AI, data architecture, and your specific business needs.

We don't force pre-built tools. We architect RAG systems from the ground up or significantly customise existing frameworks (like LangChain or LlamaIndex) to perfectly align with your specific data, workflows, and outcomes.

As an ISO 27001 Certified company, we implement bank-grade security, robust access controls, end-to-end encryption, and comprehensive auditing. We architect for compliance with regulations like GDPR.

Our team possesses deep technical knowledge in advanced retrieval, vector databases, and emerging paradigms like Agentic RAG and GraphRAG to ensure your solution is not just current, but built for tomorrow.

You retain full ownership of the bespoke RAG systems and any unique IP developed during our engagement, empowering you to fully leverage your investment and maintain a long-term competitive edge.

ISO 27001 Certified

Enterprise-Grade Security

We employ a refined, collaborative process to ensure your bespoke RAG solution is strategically aligned and delivers measurable impact.

A deep dive into your business objectives, data landscape, and key challenges to identify RAG use cases that will deliver the most impact.

We de-risk your investment by validating the technical approach, data readiness, and ROI, culminating in a 1-3 month pilot that demonstrates tangible value.

An agile development phase where we architect and build your bespoke RAG solution, integrating the optimal retrieval strategies, vector databases, and LLMs.

Beyond launch, we provide robust support, continuous performance monitoring, and proactive optimisations to ensure your AI asset evolves and delivers lasting value.

Recruitment & HR

Recruitment & HR15-minute AI conversation replaces CV spam. Personalised 'why you match' explanations for every job. Mutual interest required - no more ghosting.

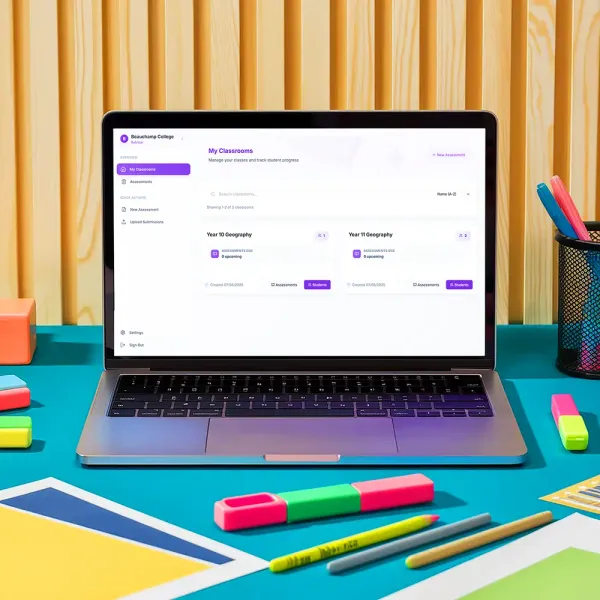

Education Technology

Education TechnologyAdvanced AI assessment tool helping geography teachers save 50% of marking time whilst providing better feedback.

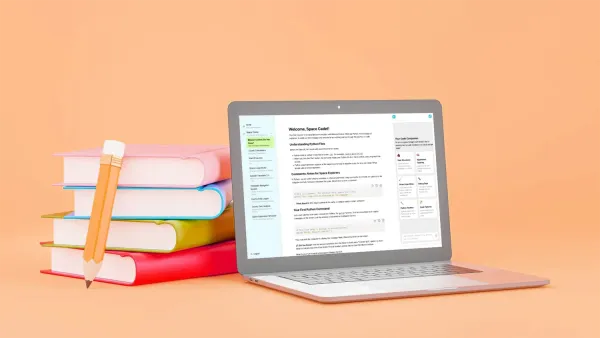

Education

EducationAn innovative AI tutor platform helping UK schools deliver engaging computer science education through personalised programming instruction and support.

We offer a full spectrum of services to help you harness the power of Retrieval-Augmented Generation.

End-to-end design, development, and deployment of bespoke RAG solutions, meticulously tailored to your specific enterprise data and use cases.

Comprehensive AI readiness assessments, identification of high-impact RAG opportunities, and expert guidance on navigating the complex technology landscape.

Seamless integration of advanced RAG capabilities into your existing enterprise applications, platforms, and workflows for enhanced performance.

Ongoing maintenance, diligent monitoring, and continuous improvement of deployed RAG solutions to ensure they remain effective, secure, and aligned with your goals.

Key terms and concepts in the world of Retrieval-Augmented Generation, demystified.

A numerical representation of text (or other data) in a high-dimensional space. Words and sentences with similar meanings are located closer together, enabling the 'semantic' part of semantic search.

A search technique that understands the intent and contextual meaning of a query, rather than just matching keywords. It's the core technology that allows RAG to find relevant information.

The process of breaking down large documents into smaller, meaningful pieces or 'chunks'. This is crucial for efficient indexing and for providing the LLM with focused, relevant context.

The collection of documents, data, and other information sources that a RAG system retrieves from. This can include anything from PDFs and databases to SharePoint sites.

A measure of how well an LLM's response is based on the provided context. A 'grounded' answer is factually consistent with the source information, while an 'ungrounded' one is a hallucination.

An architectural approach where the RAG system is not tied to a single Large Language Model. This provides the flexibility to swap or upgrade the underlying LLM as better models become available.

Your common questions about Retrieval-Augmented Generation, answered by our experts.

Hallucinations occur when an LLM generates information not grounded in its training data. RAG combats this directly by forcing the LLM to base its answer on real-time, factual information retrieved from your trusted knowledge base. Before generating a response, the model is given a package of relevant, verifiable context. This acts as a 'source of truth', compelling the model to synthesise answers from provided facts rather than inventing them, dramatically increasing the factual accuracy and trustworthiness of the output.

They are different tools for different jobs, but for many enterprise use cases, RAG is a more practical and effective solution. Fine-tuning teaches a model a new skill or style by adjusting its internal parameters, which is resource-intensive. RAG, on the other hand, teaches a model new knowledge by giving it access to external information. RAG is superior when you need to eliminate hallucinations, ensure answers are based on the most current data, and provide context from proprietary documents. For some advanced use cases, a hybrid approach using both can be optimal.

A wide variety of data sources can be integrated into a RAG knowledge base. This includes unstructured data like PDFs, Word documents, PowerPoint presentations, SharePoint sites, and website content, as well as structured data from databases (like SQL or NoSQL), CRMs (like Salesforce), and ERP systems. The key is to process and index this data effectively, often using vector embeddings, so the retrieval system can find the most relevant information regardless of its original format.

A vector database is a specialised database designed to efficiently store and query vector embeddings. In a RAG system, when your documents are converted into embeddings, a vector database is used to index them. When a user asks a question, their query is also converted into an embedding, and the vector database performs an incredibly fast similarity search to find the most relevant document chunks. Its performance is critical for a fast and accurate retrieval step.

The cost varies depending on complexity, but it's a strategic investment in turning your data into a valuable asset. We typically start with a 'Viability Testing & Proof-of-Concept' phase, budgeted between £20,000 to £60,000, to validate the approach and demonstrate ROI quickly. Full-scale custom development engagements then start from £60,000 and are scoped based on the project's specific requirements, such as the number of data sources, complexity of integration, and performance needs. RAG is generally more cost-effective long-term than continuous LLM re-training.

Security is paramount in every solution we build. As an ISO 27001 Certified company, we adhere to strict security protocols. Your data never leaves your control and is handled with the utmost care within your own secure environment. We implement bank-grade security measures including robust role-based access controls (RBAC), end-to-end encryption for data in transit and at rest, and comprehensive audit logging to meet stringent compliance requirements like GDPR. Our architecture ensures that the LLM only receives small, relevant snippets of information to answer a query, never wholesale access to the entire knowledge base.

Book a free strategy session and discover your AI advantage with our expert team

Typical response time: Within 24 hours

Rethinking What's Possible with AI.

Portland House, Belmont Business Park, Durham DH1 1TW

contact@openkit.co.uk© 2026 OpenKit. All rights reserved. Company Registration No: 13030838

Thank you for your interest! Enter your project details below and our team will get in contact within 24 hours.

"Having completed our second major AI agent development project with OpenKit, I can confidently say they're the real deal. Our system analyses large, complex and poor quality documents with remarkable accuracy, surpassing solutions like GPT4 and other AI legal tools."

"OpenKit's professionalism and creativity shone through our collaboration, ultimately leading to the creation of an AI assistant that has transformed the way our residents engage with and comprehend air pollution information in London."

"Working with OpenKit to develop our custom AI agent services has been transformative for our business. Their solution delivers exceptional performance compared to frontier models while remaining remarkably easy to verify and trust."

"Their deep understanding of our business needs, coupled with their expertise in GPT and Cloud software-services, enabled them to navigate complexities and deliver a bespoke AI solution tailored to our operations."

"OpenKit developed an impressive AI assistant, providing an engaging and informative way for our residents to understand air pollution. Their expertise turned complex data into accessible insights."

"OpenKit provided advice and proposed solutions rooted in the most recent AI and prompt-engineering research, genuinely invested in our success. Their expertise in cutting-edge AI technologies was invaluable."